How to assess the validation of a device for HRV analysis

Learn to determine if you can trust a certain device or app.

A few days ago I was reading this paper describing the development of earbuds that use ballistocardiography (BCG) to measure heart rate variability (HRV) and then to estimate stress.

BCG tends to provide lower-quality data than electrocardiography (ECG), and also than photoplethysmography (PPG), the optical sensing typically used in phones and wearables. BCG relies simply on movement, measured with an accelerometer, and this method is very prone to artifacts and noise, even more so than optical measurements.

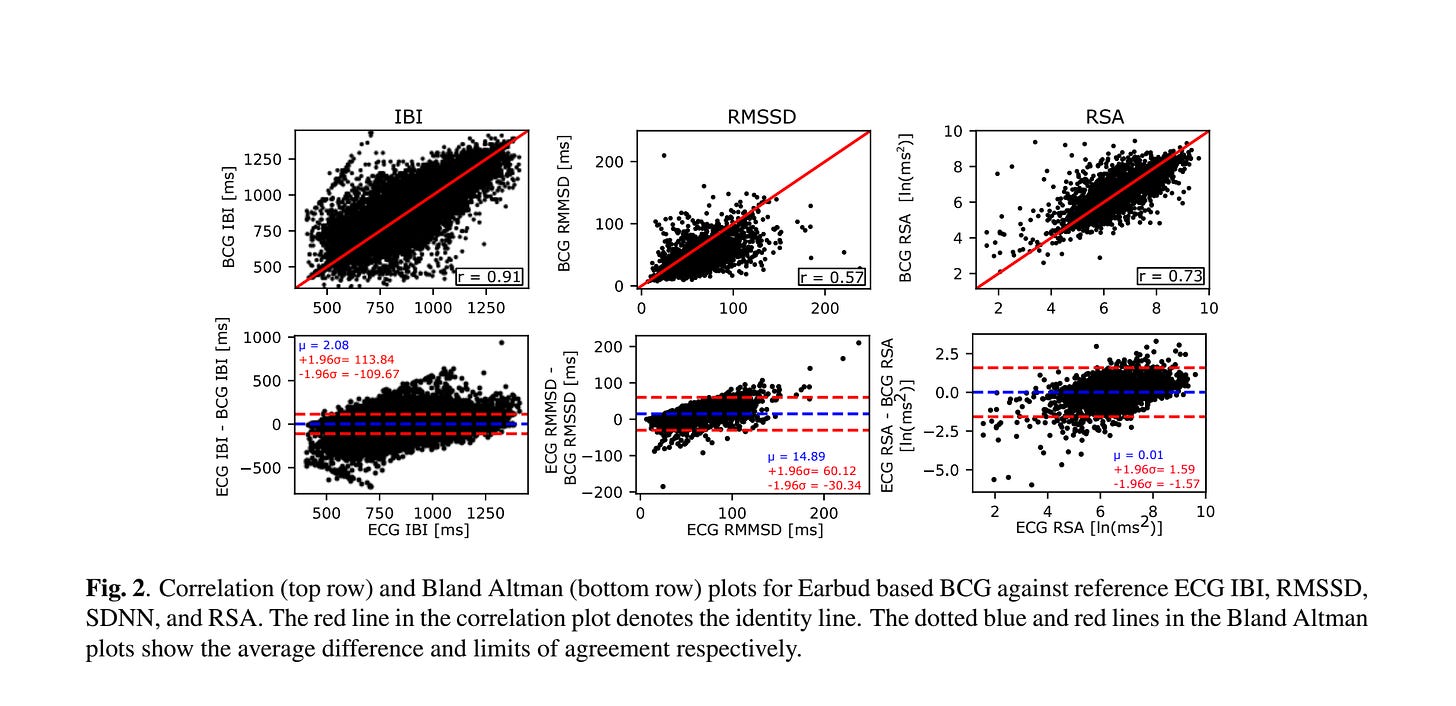

The data collected with the earbuds is indeed of very poor quality, showing that this technology cannot be trusted for HRV analysis:

Below, I will explain what you should spot in the plots above, in case this is new to you, so that you can evaluate future devices without having to rely on the researchers’ statements (or worse, the company’s marketing material), which tend to be overly optimistic, so to speak.

When a new device claiming to measure HRV is released on the market, we should look for validations in terms of the accuracy of:

- RR intervals: time intervals between consecutive heartbeats (these can be called PP intervals for optical sensors, as we look at the peaks in the PPG signal, just like you see in HRV4Training using the camera)
- rMSSD, one of the HRV indices (the most useful in my opinion, as a marker of parasympathetic activity)
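To make the link between these two quantities concrete, here is a minimal Python sketch. It assumes you already have the times of the detected beats (R peaks from an ECG, or pulse peaks from a PPG signal) in seconds; it derives the RR (or PP) intervals and computes rMSSD as the root mean square of the successive differences between those intervals. The function names and beat times are hypothetical, and a real pipeline would also need artifact correction before this step.

```python
import math

def intervals_ms(beat_times_s):
    """Convert beat timestamps (seconds) into RR/PP intervals (milliseconds)."""
    return [(b - a) * 1000.0 for a, b in zip(beat_times_s, beat_times_s[1:])]

def rmssd(intervals):
    """Root mean square of successive differences between intervals (ms)."""
    diffs = [b - a for a, b in zip(intervals, intervals[1:])]
    if not diffs:
        raise ValueError("need at least two intervals")
    return math.sqrt(sum(d * d for d in diffs) / len(diffs))

# Hypothetical beat times (seconds), e.g. from an ECG R-peak detector.
beats = [0.0, 0.80, 1.65, 2.45, 3.30]
rr = intervals_ms(beats)
print([round(x) for x in rr])  # [800, 850, 800, 850]
print(round(rmssd(rr), 1))     # 50.0
```

Because rMSSD depends on beat-to-beat differences, even small timing errors in peak detection propagate directly into it, which is why both RR intervals and rMSSD should be validated.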

A validation doesn't mean that the data is useful: for that, we also need a good protocol (e.g. avoiding continuous measurements, measuring in a reproducible context such as first thing in the morning, and all the other things I often discuss here).

Back to the validation: a validation is still necessary, because if the data is inaccurate, we can't make use of it, even with a good protocol.

How do we carry out a validation?

Normally we take simultaneous measurements with our newly developed sensor or technology and with a reference system. A reference system is the one normally used for the task, which might be less comfortable or more expensive. For HRV, that's an electrocardiogram, or ECG. For example, below is our setup from when we validated HRV4Training: you can see the phone being used (flash on), a Polar strap, and a medical-grade ECG, all measuring at the same time:

Once we have taken measurements at the same time, in a number of people, covering a broad range of resting heart rates and HRVs, we plot them against each other, and we look at the relationship.
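A minimal Python sketch of this pairing step follows. It assumes each participant contributes one simultaneous recording from the reference ECG and one from the device under test, each already reduced to a list of RR (or PP) intervals in milliseconds; the participant IDs, interval values, and the `paired_rmssd` helper are hypothetical. Each resulting pair becomes one point on the scatter plot, with the ECG value on the x axis and the device value on the y axis.

```python
import math

def rmssd(intervals):
    """Root mean square of successive differences between intervals (ms)."""
    diffs = [b - a for a, b in zip(intervals, intervals[1:])]
    return math.sqrt(sum(d * d for d in diffs) / len(diffs))

def paired_rmssd(recordings):
    """recordings: {participant_id: (ecg_intervals_ms, device_intervals_ms)}.

    Returns a list of (participant_id, ecg_rmssd, device_rmssd), one per person,
    sorted by participant ID.
    """
    pairs = []
    for pid, (ecg, device) in sorted(recordings.items()):
        pairs.append((pid, rmssd(ecg), rmssd(device)))
    return pairs

# Hypothetical simultaneous recordings for two participants.
recordings = {
    "p01": ([800, 850, 800, 850], [805, 845, 800, 855]),
    "p02": ([1000, 1010, 1000, 1010], [1000, 1012, 998, 1010]),
}
for pid, ref, dev in paired_rmssd(recordings):
    print(pid, round(ref, 1), round(dev, 1))
```

In practice, the two recordings also need to be aligned in time and cleaned of artifacts before computing rMSSD; this sketch skips both steps.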

What do we want to see?

We want to see points close to the diagonal line (also called the identity line) that cuts the plot in half. If that happens, it means that for a given HRV value derived from the ECG, we get a very similar HRV value from the other sensor.
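One simple way to put numbers on "close to the identity line" is to look at each point's vertical distance from it, that is, the difference between the device value and the ECG value for the same measurement. The Python sketch below reports the mean absolute difference and the share of points within a tolerance; the `identity_line_report` helper, the 10 ms tolerance, and both sets of pairs are hypothetical, chosen only to contrast a tight fit with a cloud of points.

```python
def identity_line_report(pairs, tolerance_ms=10.0):
    """pairs: list of (ecg_value, device_value) in ms.

    Returns (mean absolute difference from the identity line,
             fraction of points whose difference is within tolerance_ms).
    """
    diffs = [device - ecg for ecg, device in pairs]
    mean_abs_diff = sum(abs(d) for d in diffs) / len(diffs)
    within = sum(1 for d in diffs if abs(d) <= tolerance_ms) / len(diffs)
    return mean_abs_diff, within

# Hypothetical (ECG rMSSD, device rMSSD) pairs in ms.
good = [(20, 22), (45, 43), (80, 84), (120, 117)]   # close to the diagonal
cloud = [(20, 55), (45, 12), (80, 30), (100, 10)]   # a "cloud of points"

print(identity_line_report(good))   # (2.75, 1.0)
print(identity_line_report(cloud))  # (52.0, 0.0)
```

These numbers complement the scatter plot rather than replace it: the plot is what makes a cloud of points obvious at a glance.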

If instead the points are far from the identity line, the new method or device is not very accurate. A typical pattern of a very noisy measurement is the "cloud of points". This is what we see, for example, for these new earbuds developed by Samsung (among others) using ballistocardiography (BCG).

If you look at the second plot above, the rMSSD plot, you can see, for example, points where an rMSSD of 100 ms from the ECG (i.e. the real rMSSD) corresponds to an rMSSD of about 10 ms from the earbuds.

In general, the vast majority of data points are way too far from the identity line, meaning that this device is no good for this task. If you have worked on this device, please don’t take this personally: I am just using it as an example to show how to validate a device, and what to look for in a validation. I do appreciate that the authors have published these plots, which are very informative, instead of just summary tables, which would have prevented us from fully capturing the ability (or inability) of the device to measure HRV.

Keep in mind that this is likely the quality of many devices on the market for which validations are not available or not shown.

Many more issues arise at this point, from the false promise of continuous measurement to the interpretation of this noise as stress or something else (I discuss these points in more detail here).

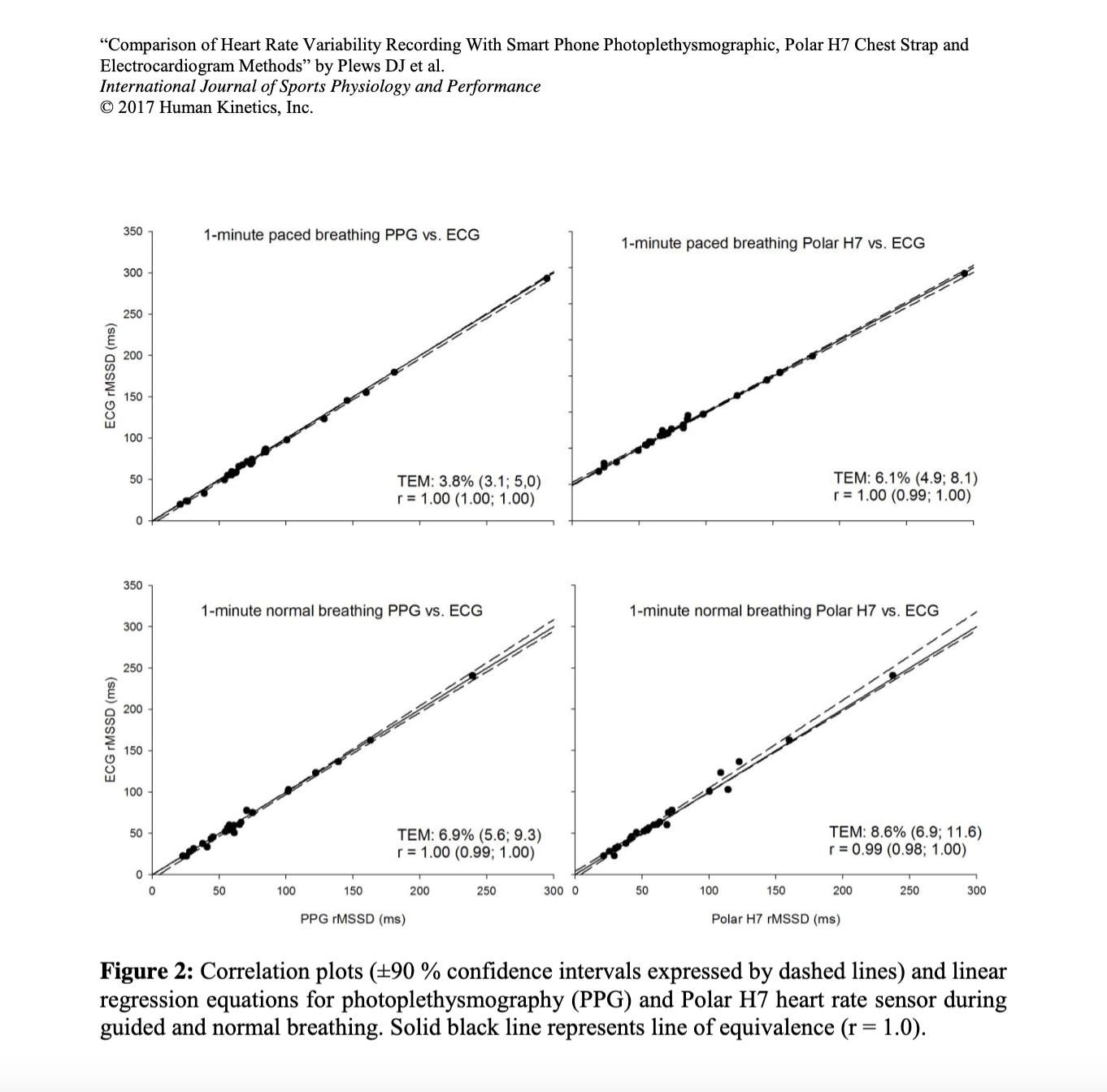

How should the data look? Here is our validation of HRV4Training, looking at the accuracy of the camera-based measurement and of Polar chest straps against ECG. As we can see, the data falls almost perfectly on the identity line (full text of the paper here):

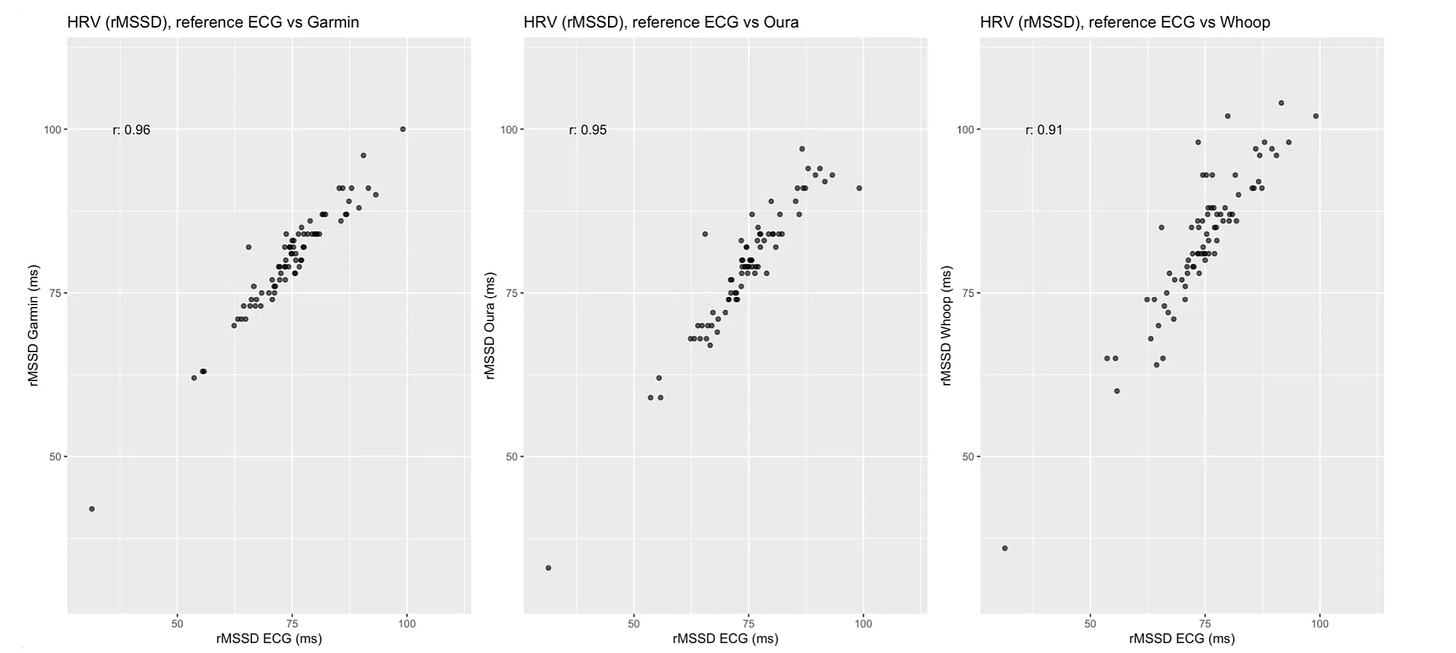

Below is another validation I carried out for various wearables. It is a bit noisier, but still decent: while the Whoop is somewhat noisy, especially at higher values, all sensors capture the rMSSD of the reference ECG device quite well:

There are no clouds of points in there.

When you see a new device or app on the market, look for validations and understand how to assess them. You want to see the data, not percentages of accuracy, which are rather meaningless when it comes to HRV.
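To see why a single percentage can mislead, consider this hypothetical Python example: a device whose average rMSSD across participants is very close to the ECG average, so a "group-level accuracy" figure looks excellent, while every individual measurement is far from the identity line. The data and the way the percentage is computed are made up for illustration; companies may define "accuracy" in other ways, which is part of the problem.

```python
# Hypothetical rMSSD values (ms) for six participants, measured simultaneously.
ecg = [20, 35, 50, 65, 80, 100]     # reference ECG
device = [75, 10, 90, 30, 55, 87]   # device under test

mean_ecg = sum(ecg) / len(ecg)
mean_dev = sum(device) / len(device)

# A summary "accuracy" based only on group averages (one of many possible definitions).
group_accuracy = 100.0 * (1 - abs(mean_dev - mean_ecg) / mean_ecg)

# Per-participant errors: the distance of each point from the identity line.
per_point_error = [abs(d - e) for e, d in zip(ecg, device)]

print(f"group-level accuracy: {group_accuracy:.1f}%")        # group-level accuracy: 99.1%
print(f"largest single error: {max(per_point_error)} ms")    # largest single error: 55 ms
```

The headline number suggests a near-perfect device, while plotting the six pairs would show a cloud of points.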

If you cannot find a validation, and the company cannot provide one, it's an easy pass. No need to invest our resources there.

Finally, there is now enough good technology out there for a proper assessment of your resting physiology at a meaningful time that our interest and energy should be focused on making better use of the data, rather than on whatever it is that we are doing these days with gadgets and “stress estimates”.

Keep it simple. Get a good device. Use a good protocol (i.e. measure first thing in the morning, far from stressors, while seated, for the most sensitive assessment of your physiological stress level), and aim for stability.

Marco holds a PhD cum laude in applied machine learning, an M.Sc. cum laude in computer science engineering, and an M.Sc. cum laude in human movement sciences and high-performance coaching.

He has published more than 50 papers and patents at the intersection between physiology, health, technology, and human performance.

He is co-founder of HRV4Training, advisor at Oura, guest lecturer at VU Amsterdam, and editor for IEEE Pervasive Computing Magazine. He loves running.

Social:

Twitter: @altini_marco.

Personal Substack.